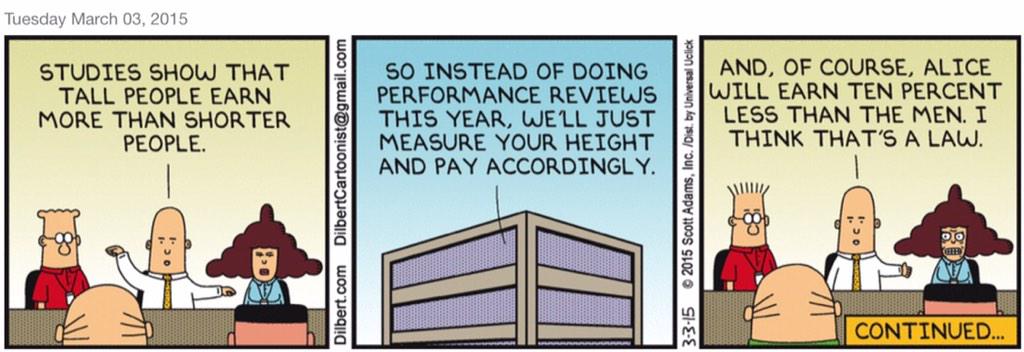

Wicked rules can pervert a community.

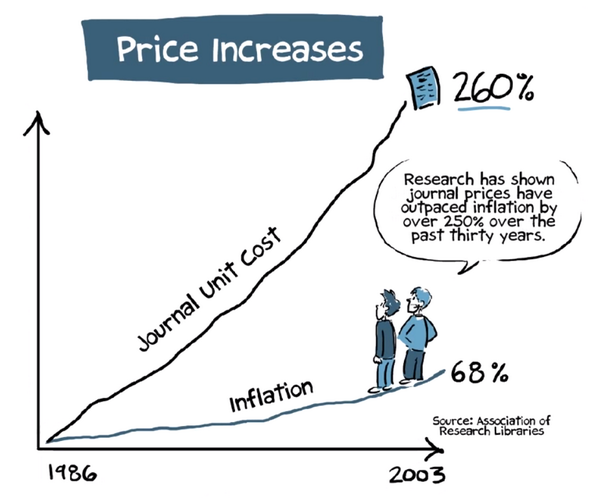

In science, the pioneer wicked rule was evaluating scientists by how much we published, how many papers we coauthored regardless of our real contribution to them, and in which journals we published, as a proxy for quality or merit. If you coauthor many articles in good (highly cited) journals then you must be a good scientist; that was the institutional mantra. The basic underlying assumption was that the citations to someone's work by other scientists are a good measure of the importance of her/his research, and I will not question this here. But in this century, to measure the importance of someone's work, we don't need impact factors anymore and we don't need to care about which journal hosts the article in question, because the citations to each individual article are accessible in a few clicks.

Evaluating by the “mere number of a researcher’s publications” disincentivized risky and potentially ground-breaking research lacking a short-term publication outcome, as the editor of Science argues. But this is what was being done. And as a result, the number of scientific articles published every year has nearly tripled since 1990.

Figure: Cumulative number of papers published in Biomedicine (source: PubMed via this)

The same has happened in most countries and in most disciplines, although some have moved much faster than the average, look :-)


Does this mean that our science is now three times better? Does it mean that our understanding of Nature grows 3 times faster than 25 years ago? 10 times, in the case of Yoga? Mmm. It does mean that now we can only track a small fraction of all the papers that are relevant to our research.

To compensate for the perversion inherent to this article-count approach, evaluators started weighting that number with something called Journal Impact Factor, assuming that articles published in highly cited journals have a statistically higher chance of having an impact. A perfect plot for a self-fulfilling prophecy. The warnings against this practice are a clamor.

Then citation databases went online and another wicked parameter came on the scene:

h, the Hirsch index, was adopted in the last decade to overcome the number-of-publications criterion. It has now become commonplace in the evaluation of proposals. But h keeps overvaluing multi-authored papers beyond reason, because it disregards the number of authors and their relative contribution (in most research areas, the relative scientific contribution of the authors of a paper can be approximated, to first order, by their position in the author list). The citations to an article of yours count equally whether you are the 1st author or the 100th. Therefore, a paper with 100 authors has 100 times more impact on the evaluation system than a single-authored paper. And I'm not being rhetorical here. Please, meet two of the highest-h scientists in Spain (just an example):

Profile 1: 61k citations, 137 papers in 2013 only, h=112.

Profile 2: 117k citations, 164 papers in 2013 only, h=75.

I leave it to you to find the flaw.

Say you are part of a group of 100 friends, each leading his/her own paper and incorporating the other 99 as coauthors. Each citation to any of those 100 papers will reward 100 times more people than a citation to a lone author. Imagine now that each of them cites each other's papers. They immediately obtain 100 citations per paper. Not bad. It may seem this is a self-citation problem that can be filtered out easily, but it goes far beyond that. The 100 will still be rewarded beyond reason even if their citations come from someone else. Imagine that another group of 100 authors cites all your 100 papers as well as the lone author's paper. This will give 100 citations to the single author but 10,000 citations to each of the others. Note that, if these were the first articles for all of them, then the single author would now have h=1, while all the others would have been miraculously promoted to h=100.
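The arithmetic of this thought experiment can be checked with a few lines of Python. This is only a sketch under stated assumptions: `h_index` is a plain implementation of the usual definition (the largest h such that h papers have at least h citations each), and the outside group is assumed to produce 100 citing papers, each citing every paper in the example. The names `GROUP_SIZE` and `CITING_PAPERS` are illustrative.

```python
def h_index(citations):
    """Largest h such that at least h papers have >= h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


GROUP_SIZE = 100     # friends in the group; each one leads a paper
CITING_PAPERS = 100  # assumed number of outside papers citing everything

# Every group member signs all 100 group papers,
# and each of those papers receives 100 outside citations.
group_member = [CITING_PAPERS] * GROUP_SIZE

# The lone author has one paper, cited by the same 100 outside papers.
lone_author = [CITING_PAPERS]

print("group member: citations =", sum(group_member), " h =", h_index(group_member))
print("lone author:  citations =", sum(lone_author), " h =", h_index(lone_author))
```

Under these assumptions each group member ends up with 10,000 citations and h=100, while the lone author has 100 citations and h=1, although every paper involved was cited exactly the same number of times.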

This problem could be easily solved by weighting each author by $1/2^i$, where $i$ is the position of each author in the list. Because

$$\sum_{i=1}^{\infty} \frac{1}{2^i} = 1,$$

the weight of all papers would be equal in the citation system. For this, the remainder of the series must be given to the last author, which in practice implies that the last 2 authors get the same credit. Definitely, much fairer than giving all authors a weight of one.
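A minimal sketch of this position-based weighting, assuming 1-based author positions and exact arithmetic with Python's `fractions` module. The function name `author_weights` is made up for illustration and does not belong to any existing bibliometric tool.

```python
from fractions import Fraction


def author_weights(n_authors):
    """Weight 1/2**i for the author in position i (1-based).

    The last author also receives the remainder of the geometric
    series, so the weights of any paper always add up to exactly 1.
    """
    if n_authors < 1:
        raise ValueError("a paper needs at least one author")
    weights = [Fraction(1, 2**i) for i in range(1, n_authors + 1)]
    weights[-1] += 1 - sum(weights)  # remainder goes to the last author
    return weights


for n in (1, 2, 3, 5):
    print(n, [str(w) for w in author_weights(n)])

# Credit from 100 citations to a 100-author paper, split by position.
credit = [100 * w for w in author_weights(100)]
print(float(credit[0]), float(credit[1]), sum(credit))
```

With three authors the weights come out as 1/2, 1/4 and 1/4, so the last two authors share the same credit, as noted above. The total credit handed out by a paper stays equal to its citation count, whether it has one author or a hundred.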

But this was not done. Seemingly, decision makers were not interested in implementing anything like that.

Very predictably, the number of authors per paper just rocketed, and former research groups have often become author pools, with the entire group signing every paper published by each of its members. A symptom of this is that few researchers dare to publish on their own today:

Figure: Average number of authors per paper during the last 100 years. Source: PubMed

Figure: % of single-authored papers over the last century.

Figure: Papers published per year. Source

Figure: The left bar indicates the average number of articles published by authors that stopped publishing 15 years after their first publication. The blue bar on the right shows the articles published in the same timespan but by researchers that continued publishing after 15 years. The red bar on top indicates the articles of those same researchers after the 15th year. One can see that the researchers that continue publishing are those having a high research output. It also shows that the research output before the year break is the portion that contributes most to the overall values. Source

So the drive to scientific publication is still based on quantity, not quality.

Ask any editor how many of their requests to review a manuscript are refused by peers, and you'll learn that they often end up doing the reviews themselves. Too many papers for so few reviewers/authors. It is unsurprising that you find funny bugs like this in articles that were supposed to have been reviewed.

It is difficult to find objective (quantitative) criteria for quality. And perhaps it is also time to question the trust in objective parameters. Alternatives such as interpreting the subjective impact foreseen for a given piece of research are also risky. But if the citation criterion is to be adopted, then we do not need h-like indexes or journal impact factors. Better metrics have been proposed (examples), metrics accounting for the author order and the number of coauthors. They just need to be adopted.

Under the present rules, young researchers are pressed to publish as much as possible instead of publishing as well as possible, not only perverting the research system but also inflating a huge publication bubble. The warning lights have long been on. China has already realized the problem and may soon take action. Why not Europe? Will we wait until this bubble bursts?

Wicked rules pervert communities, so let's just adopt better rules. In 10 years the science publishing panorama will be unrecognizable anyway.

PD: Interesting discussion in the comment section of this column in last week's Nature.

PD2: Ironically, a journal called Bubble Science closed earlier this year.

PD3: A new metric proposed: Author Impact Factor: tracking the individual scientific impact, by Kumar Pan & Fortunato. link

PD4: Key literature: "The coupling between devaluation of writing in scientific authorship and inflation of citation indices" by Terry Engelder: https://rock.geosociety.org/net/gsatoday/archive/17/7/pdf/i1052-5173-17-7-44.pdf

PD5: A new journal now allows publishing citable articles of less than 200 words with a DOI. What next? Citable tweets?

PD5b: A paper reinventing the trapezoidal rule https://t.co/CANpYZ8GkX published in high-impact journal & has 268 citations pic.twitter.com/ECI04VaoQ3 — ∆ Garcia-Castellanos (@danigeos) January 28, 2016

PD6: A PLOS ONE paper by V. Vavryčuk including some references on this: "Fair ranking of researchers and research teams" https://dx.plos.org/10.1371/journal.pone.0195509.

PD2022: China is now the first country by number of peer-reviewed publications as well as by most-cited articles. Thousands of scientists publish a paper every 5 days, and the number of such authors has multiplied by 20 over 15 years.
